|

Overview:

Rolls and colleagues discovered face-selective neurons in the inferior

temporal visual cortex; showed how these and object-selective

neurons have translation, size, contrast, spatial feequency and

in some cases even view invariance; and showed how neurons encode

information using mainly sparse distributed firing rate encoding. These

neurophysiological investigations are complemented by one of the few

biologically plausible models of how face and object recognition are

implemented in the brain, VisNet. These discoveries are complemented by

investigation of the visual processing streams in the human brain using

effective connectivity. Key descriptions are in 639, B16, 656, 682 and 508. An account of how some of the discoveries were made is provided in section 2 of 692.

The discovery of face-selective

neurons (in the amygdala (38, 91, 97), inferior temporal visual cortex (38A,

73,

91,

96, 162), and orbitofrontal cortex (397))

(see 412, 451, 501, B11, B12, B16).

Our discovery of face selective neurons in the inferior temporal visual

cortex was published in 1979 (Perrett, Rolls and Caan 38A), and in the amygdala was published in 1979 (Sanghera, Rolls and Roper-Hall 38), with follow-up papers in 1982 (Perrett, Rolls and Caan, 73), 1984 (Rolls 91), and for the amygdala in 1985 (Leonard, Rolls, Wilson and Baylis 97).

These discoveries were followed up and confirmed by others (Desimone, Albright,

Gross and Bruce 1984 J Neuroscience; see 682). The discovery of face neurons is

described by Rolls (2011 501), 2024 692 section 2).

The discovery of face

expression selective neurons in the cortex in the superior temporal

sulcus (114,

126) and orbitofrontal cortex (397). The discovery of reduced connectivity in this system in autism (541, 609).

The discovery that visual

neurons in the inferior temporal visual cortex implement translation, view, size, lighting, and spatial frequency

invariant representations of faces and objects (91, 108, 127, 191, 248, B12, B16).

The effective connectivity of

the human prefrontal cortex using the HCP-MMP human brain atlas has

identified different systems involved in visual working memory (660).

The discovery that in

natural scenes, the receptive fields of inferior temporal cortex

neurons shrink

to approximately the size of objects, revealing a mechanism that

simplifies

object recognition (320, 516, B12, B16).

Top-down

attentional control of visual processing by inferior temporal cortex

neurons in

complex natural scenes (445).

The discovery that in

natural scenes, inferior temporal visual cortex neurons encode

information

about the locations of objects relative to the fovea, thus encoding

information

useful in scene representations (395, 455, 516).

The discovery that the inferior

temporal visual cortex encodes information about the

identity of objects, but not about their reward value, as shown by reward reversal and devaluation investigations (32, 320, B11). This provides a foundation for a key principle in primates

including humans that the reward value and emotional valence of visual stimuli are

represented in the orbitofrontal cortex as shown by one-trial reward reversal learning and devaluation investigations (79, 212, 216) (and to some extent in the amygdala 38, 383, B11), whereas before

that in visual cortical areas, the representations are about objects and stimuli independently

of value (B11, B13, B14, B16). This provides for the separation of emotion from perception (B11, B16, 674).

The discovery that information

is encoded using a sparse distributed graded representation with

independent

information encoded by neurons (at least up to tens) (172, 196, 204, 225, 227,

321, 255, 419, 474,

508, 553, 561, B12, B16). (These

discoveries argue against ‘grandmother cells’.) The representation is

decodable

by neuronally plausible dot product decoding, and is thus suitable for

associative computations performed in the brain (231, B12).

Quantitatively

relatively little information is encoded and transmitted by

stimulus-dependent

('noise') cross-correlations between neurons (265, 329, 348, 351, 369, 517). Much of the

information is available from the firing rates very rapidly, in 20-50

ms (193,

197, 257, 407). All these discoveries are fundamental in our

understanding of

computation and information transmission in the brain (B12, B16).

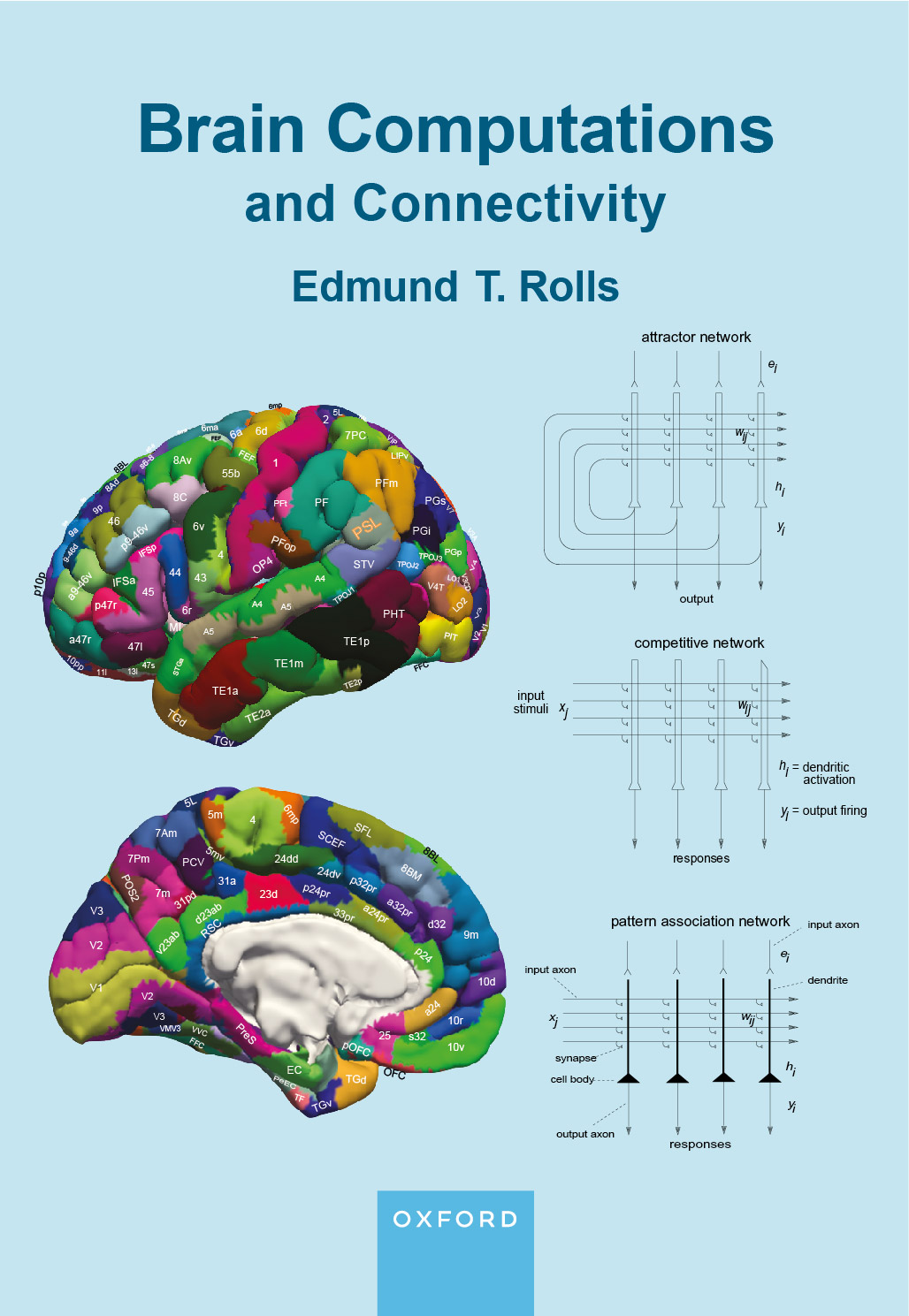

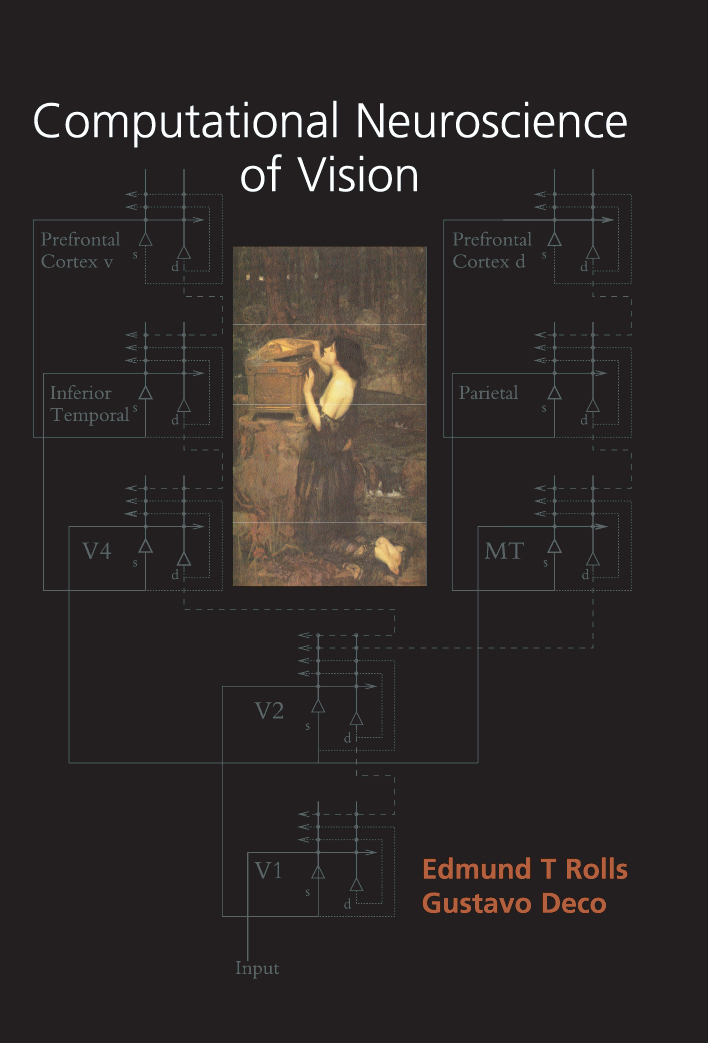

A

biologically plausible theory and model of invariant visual object recognition in the ventral

visual

system, VisNet, closely related to empirical discoveries (162,

179,

192,

226,

245,

275,

277,

280,

283,

290,

304, 312,

396,

406,

414,

446,

455, 473,

485, 516, 535,

536, 554, B12, 589, 639, B16, 703).

This approach is unsupervised, uses slow learning to capture

invariances using the statistics of the natural environment, uses only

local synaptic learning rules, and is therefore biologically

plausible in contrast to deep learning approaches with which it is contrasted (639, B16, 703).

A further advance towards biological plausibility is use of a synaptic

learning rule with, in addition to long-term potentiation,

heteroynaptic long-term depression that depends on the strength of the

synaptic weight, which removes the need for normalization of synaptic

weight vectors, and improves performance (703).

A theory

and model of coordinate transforms in the dorsal visual system using a

combination of gain modulation and slow or trace rule competitive

learning. The theory starts with retinal position inputs gain modulated

by eye position to produce a head centred representation, followed by

gain modulation by head direction, followed by gain modulation by

place, to produce an allocentric representation in spatial view

coordinates useful for the idiothetic update of hippocampal spatial

view cells (612).

These coordinate transforms are used for self-motion update in the

theory of navigation using hippocampal spatial view cells (633, 662, B16).

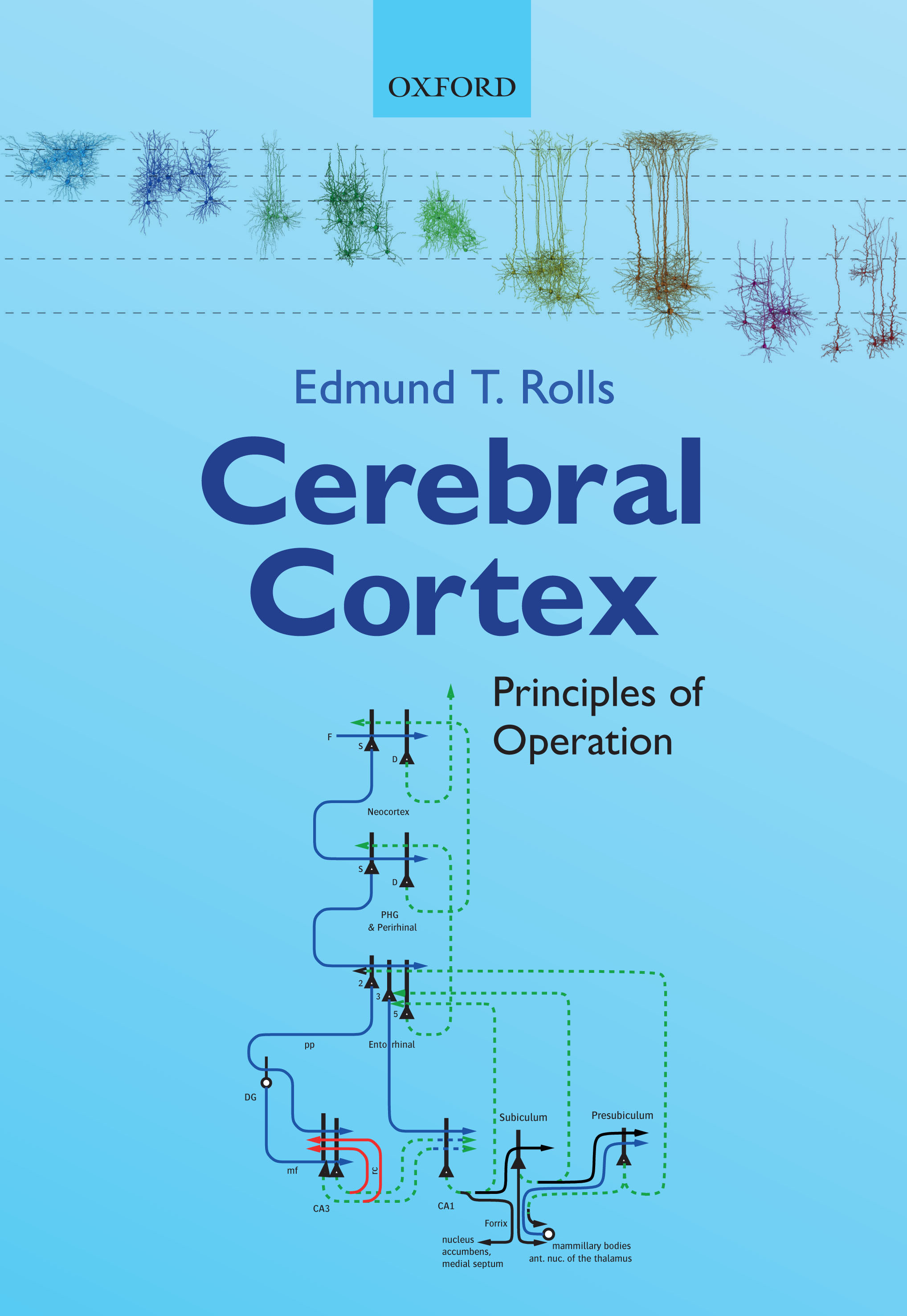

The

effective connectivity of the human visual cortical streams using the

HCP-MMP human brain atlas has identified different streams (656, B16, 682, 676, 685, 686, 688, 695).

A Ventrolateral Cortical Visual ‘What’ Stream for object and face recognition

projects hierarchically to the inferior temporal visual cortex which

projects to the orbitofrontal cortex for reward value and emotion, and

to the hippocampal memory system (656, 676, 685, 695).

A Ventromedial Cortical Visual ‘Where’ Stream

for scene representations connects to the parahippocampal gyrus and

hippocampus (656, 688, 685, 695). This is a new conceptualization of 'Where' processing for

the hippocampal system, which revolutionizes our understanding of

hippocampal function in primates including humans in episodic memory

and navigation (686, 682, 662, 692, 702).

A computational theory and model of how visual feature combination

neurons produce spatial view cells along the ventromedial scene pathway

that are linked together in a continuous attractor network in the

medial parahippocampal cortex and hippocampus to form allocentric scene

representations links together many of the empirical discoveries (696).

A Dorsal Visual Stream connects via V2

and V3A to MT+ Complex regions (including MT and MST), which connect to

intraparietal regions (including LIP, VIP and MIP) involved in visual

motion and actions in space. It performs coordinate transforms for

idiothetic update of Ventromedial Stream scene representations (682, 662).

An

Inferior bank STS (superior temporal sulcus) cortex Semantic Stream receives

from the Ventrolateral Visual Stream, from visual inferior parietal

PGi (655), and from the ventromedial-prefrontal cortex reward system (649) and connects to

language systems (682, 654). A Superior bank STS cortex Semantic Stream receives visual

inputs from the Inferior STS Visual Stream, PGi, and STV, and auditory

inputs from A5, is activated by face expression, motion and

vocalization, and is important in social behaviour, and connects to

language systems (656, B16, 682, 654, 685).

In addition, I have

shown how the hippocampal episodic memory system has connectivity to

anterior temporal lobe multimodal including visual regions, and to

inferior parietal visual cortical regions, and have produced a theory

and model of how the hippocampal episodic memory inputs how these

inputs could help to form semantic memories (694).

These discoveries

on the connectivity of visual cortical regions in humans have been

anchored to different functions by analysis of the activations to views of places,

faces, body parts, and tools, which show a whole set of different

cortical regions activated from medial to lateral in the ventral

temporal lobe, and also activation of semantically relevant cortical

regions such as motion regions activated by the sight of stationery

tools (685).

Moreover, it was discovered that the different functional

connectivities to each of these types of visual stimuli reflected some

of the semantic properties of each type of visual stimulus (685).

Some of the spatial view cortical regions were further revealed and

confirmed during the performance of an episodic memory task when

spatial stimuli were used (690), and have been found to be right lateralised especially in males.

Binaural

sound recording to allow 3-dimensional sound localization (11A,

UK provisional

patent, Binaural sound recording, B16).

|